Docker Networking

Understanding docker0

[root@hadoop101 tomcat]# docker run -d -P --name tomcat01 tomcat

[root@hadoop101 tomcat]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# The Linux host can ping the tomcat container

[root@hadoop101 tomcat]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.051 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.040 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.062 ms

^C

--- 172.17.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.040/0.051/0.062/0.009 ms

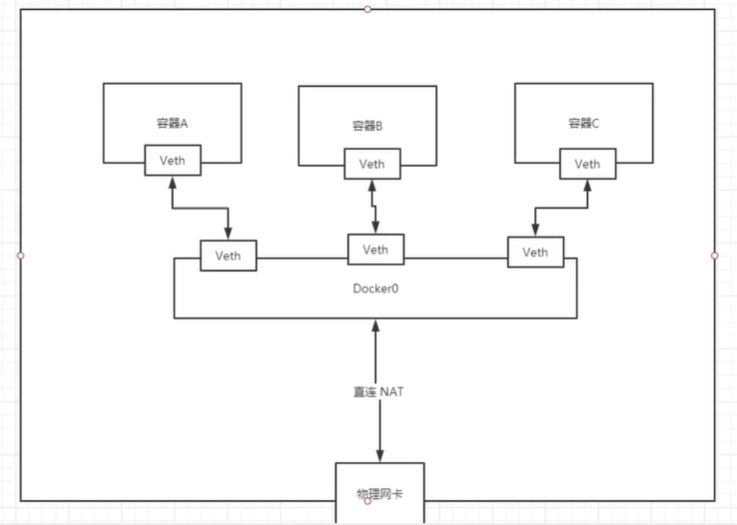

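# How it works

# 1. Every time we start a container, Docker assigns it an IP address. Installing Docker also creates a network interface named docker0, which works in bridge mode; containers are attached to it using veth pairs.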

[root@hadoop101 tomcat]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@hadoop101 tomcat]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:be:ec:f4 brd ff:ff:ff:ff:ff:ff

inet 192.168.109.129/24 brd 192.168.109.255 scope global noprefixroute dynamic ens33

valid_lft 1417sec preferred_lft 1417sec

inet6 fe80::bb54:3383:15df:cdcd/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:b9:66:85:62 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:b9ff:fe66:8562/64 scope link

valid_lft forever preferred_lft forever

13: vethf91952f@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 52:20:e8:a7:8e:64 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::5020:e8ff:fea7:8e64/64 scope link

valid_lft forever preferred_lft forever

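# The container's interface eth0@if13 and the new host interface vethf91952f@if12 form a pair: each name ends in the interface index of its peer (13 and 12)

# veth pair:

# A veth pair is a pair of virtual network interfaces that are always created together; one end attaches to the network protocol stack, and the two ends are connected directly to each other

# A veth pair acts as a link that connects virtual network devices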
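The pairing can be read straight off the interface names: the number after `@if` is the interface index of the peer. The following is a minimal sketch, assuming names in the `name@ifN` form printed by `ip addr`; the helper `peer_index` is hypothetical and only illustrates the parsing.

```shell
# peer_index: print the peer interface index encoded in an `ip addr` name
# such as "eth0@if13". Hypothetical helper, not part of Docker or iproute2.
peer_index() {
  printf '%s\n' "$1" | sed -n 's/^.*@if\([0-9][0-9]*\).*$/\1/p'
}

# Names taken from the ip addr output in this section.
peer_index 'eth0@if13'          # container side: peer is host interface 13
peer_index 'vethf91952f@if12'   # host side: peer is container interface 12
```

On Linux, the same peer index should also be readable inside the container from `/sys/class/net/eth0/iflink`, which avoids parsing names at all.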

[root@hadoop101 tomcat]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4ef3c81eb3c1 bridge bridge local

33d7079b5b7b host host local

cc349c354a83 none null local

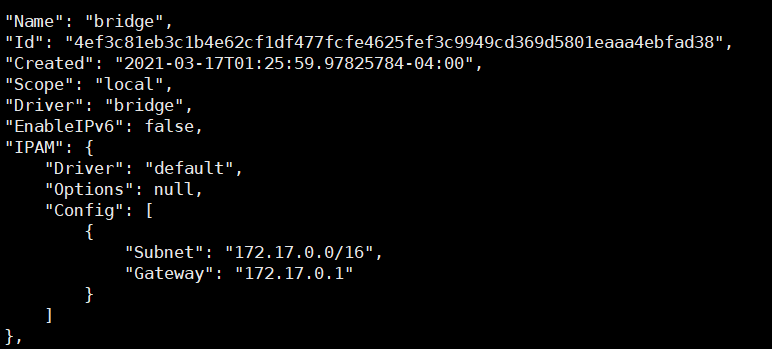

[root@hadoop101 tomcat]# docker network inspect 4ef3c81eb3c1

[

{

"Name": "bridge",

"Id": "4ef3c81eb3c1b4e62cf1df477fcfe4625fef3c9949cd369d5801eaaa4ebfad38",

"Created": "2021-03-17T01:25:59.97825784-04:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"2a66292f2c436c4f00b1d45884ac0b9c76592aa66d8f4682da94c85e412e9270": {

"Name": "tomcat02",

"EndpointID": "f94f672cb84af4f329aad1fb0188d675c62bcffdad3703a50ae0c373898407b0",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"3994fc4f765a58c19636efeb871d1f7ecaffd5c7999385da5dc05c50b26e876f": {

"Name": "tomcat01",

"EndpointID": "26f16121dbf0790ad47efaced820254e8855410770b4fb3332cfbcc68bd07fc6",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"edc9abb00203f5a07fc1f112ff14baf9f4bd9ef9e28c16b7ec64146233dbaa1c": {

"Name": "tomcat03",

"EndpointID": "ec3ac8e502222d3eae1bbd89b03c2be2b213cbb96a64625b8a2a1370debece01",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

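Conclusions:

tomcat01 and tomcat02 share the same bridge, docker0, which acts as their gateway (172.17.0.1).

Unless a network is specified, every container is attached to docker0, and Docker assigns it an available IP address from the bridge's subnet by default.

All networks in Docker are virtual.

When a container is removed, its veth pair is removed with it.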

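--link

Consider a scenario: we have written a microservice configured with database url = ip. If the database IP changes while the project keeps running, the connection breaks. To handle this, we would like to reach a container by its name instead of its IP.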

[root@hadoop101 tomcat]# docker exec -it tocmat01 ping tomcat02

Error: No such container: tocmat01

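# Note: the error above comes from the misspelled container name (tocmat01). Even with the correct name the ping would fail, because the default bridge does not resolve container names

# How to fix it: start a container with --link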

[root@hadoop101 tomcat]# docker run -d -P --name tomcat03 --link tomcat01 tomcat

edc9abb00203f5a07fc1f112ff14baf9f4bd9ef9e28c16b7ec64146233dbaa1c

[root@hadoop101 tomcat]# docker exec -it tomcat03 ping tomcat01

PING tomcat01 (172.17.0.2) 56(84) bytes of data.

64 bytes from tomcat01 (172.17.0.2): icmp_seq=1 ttl=64 time=0.098 ms

64 bytes from tomcat01 (172.17.0.2): icmp_seq=2 ttl=64 time=0.041 ms

# However, tomcat01 cannot ping tomcat03 by name: the link works in one direction only, because it was configured in tomcat03 and not in tomcat01

# Why

[root@hadoop101 tomcat]# docker inspect tomcat03

[

{

"Id": "edc9abb00203f5a07fc1f112ff14baf9f4bd9ef9e28c16b7ec64146233dbaa1c",

"Created": "2021-03-17T07:23:27.174413443Z",

···

"Links": [

"/tomcat01:/tomcat03/tomcat01"

]

···

}

]

[root@hadoop101 tomcat]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 tomcat01 3994fc4f765a

172.17.0.4 edc9abb00203

# tomcat03 lists tomcat01 in its Links setting, and tomcat03's hosts file contains an entry for tomcat01

# By contrast, tomcat01 has no entry for tomcat03 in either Links or its hosts file

[root@hadoop101 tomcat]# docker inspect tomcat01

[

{

"Id": "3994fc4f765a58c19636efeb871d1f7ecaffd5c7999385da5dc05c50b26e876f",

"Created": "2021-03-17T06:37:40.523952043Z",

···

"Links": null

···

}

]

[root@hadoop101 tomcat]# docker exec -it tomcat01 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 3994fc4f765a

# --link only adds a mapping to the container's hosts file

# Using --link is not recommended; use a custom network instead
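Because `--link` amounts to a hosts-file entry, the one-way behaviour can be reproduced by looking names up in the two hosts files shown above. This is a minimal sketch; `lookup_host` is a hypothetical helper that mimics a hosts-file lookup and is not how the resolver is actually implemented.

```shell
# lookup_host NAME: read hosts-format lines on stdin and print the IP address
# mapped to NAME; returns non-zero if NAME is absent. Hypothetical helper.
lookup_host() {
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }                  # skip comment lines
    {
      for (i = 2; i <= NF; i++)
        if ($i == name) { print $1; found = 1; exit }
    }
    END { exit !found }
  '
}

# The last two entries of tomcat03's hosts file (from the output above).
tomcat03_hosts='172.17.0.2 tomcat01 3994fc4f765a
172.17.0.4 edc9abb00203'

# tomcat01's hosts file only maps its own container ID.
tomcat01_hosts='172.17.0.2 3994fc4f765a'

# tomcat03 can resolve tomcat01, but not the other way round.
printf '%s\n' "$tomcat03_hosts" | lookup_host tomcat01 || echo "tomcat01 not found"
printf '%s\n' "$tomcat01_hosts" | lookup_host tomcat03 || echo "tomcat03 not found"
```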

Custom Networks

List all Docker networks

[root@hadoop101 tomcat]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4ef3c81eb3c1 bridge bridge local

33d7079b5b7b host host local

cc349c354a83 none null local

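Network modes

bridge: bridged through a Docker bridge (the default; networks we create ourselves also use bridge mode)

none: no networking is configured

host: shares the host's network stack

container: shares another container's network stack (rarely used, very limited)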

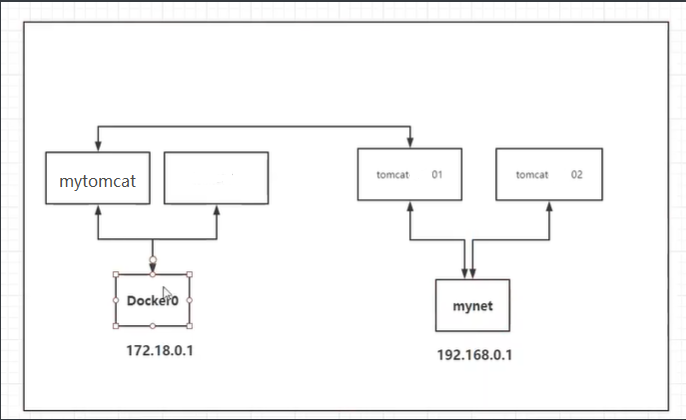

Test

# A plain docker run implicitly uses --net bridge, which is docker0; the two commands below are equivalent

[root@hadoop101 tomcat]# docker run -d -P --name tomcat01 tomcat

[root@hadoop101 tomcat]# docker run -d -P --name tomcat01 --net bridge tomcat

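# docker0 characteristics: it is the default network; containers cannot be reached by name, although --link can work around that

# Create a custom network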

[root@hadoop101 tomcat]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

b3711d274a3b0c26d0aee921ed5b29f5c4a6aaf56776b84ec5063311dff268a7

[root@hadoop101 tomcat]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4ef3c81eb3c1 bridge bridge local

33d7079b5b7b host host local

b3711d274a3b mynet bridge local

cc349c354a83 none null local

# Start tomcat containers on our own network

[root@hadoop101 tomcat]# docker run -d -P --name tomcat01 --net mynet tomcat

59b8c40e4e798d3518be484cc689cab83ba7290e5aab8b029a4daa0424fcad72

[root@hadoop101 tomcat]# docker run -d -P --name tomcat02 --net mynet tomcat

b6bd672de6397e4395ebddecc0cf05ee6ebb4ecdceaff39daaf5942bf876c3f1

# Now the containers can ping each other by name without --link

# On a custom network, Docker maintains the name-to-IP mapping for us
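Name resolution on the custom network can be checked in both directions with a small wrapper. This is a sketch only: `can_resolve` is a hypothetical helper, and it assumes the image ships a `ping` binary, as the ping output elsewhere in this walkthrough suggests.

```shell
# can_resolve FROM TO: ping container TO by name from inside container FROM.
# Hypothetical wrapper around docker exec; sends three echo requests.
can_resolve() {
  docker exec "$1" ping -c 3 "$2"
}

# Usage (requires the tomcat01 and tomcat02 containers started on mynet):
#   can_resolve tomcat01 tomcat02
#   can_resolve tomcat02 tomcat01
```

Unlike `--link`, both directions are expected to succeed, since neither container needs a hand-written hosts entry.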

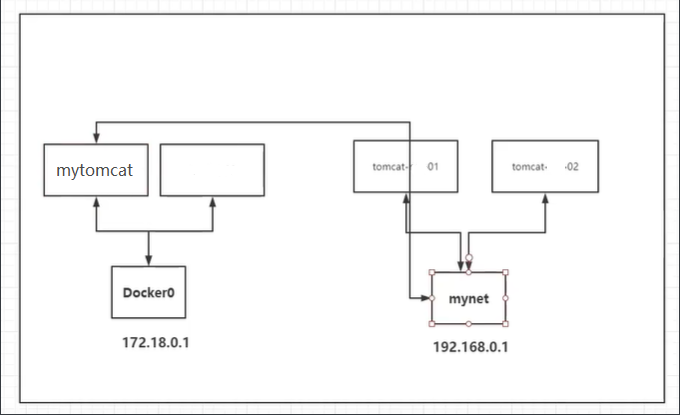

Connecting Networks

Start mytomcat on the default bridge network

[root@hadoop101 tomcat]# docker run -d -P --name mytomcat tomcat

a6c9618e2f00b8d7b1b6c3fcd9a6cf30deb54ef96aa3082af2a8bd9ee1bdd528

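At this point mytomcat cannot reach tomcat01, because the two containers are attached to different networks (bridge and mynet), each with its own subnet

We need to connect mytomcat to mynet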
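The subnet claim can be checked with a little arithmetic. The sketch below uses two hypothetical helpers, `ip_to_int` and `in_subnet`, and the addresses that `docker network inspect` reports for the bridge and mynet networks in this walkthrough (172.17.0.2 for mytomcat on bridge, 192.168.0.0/16 for mynet).

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the dotted quad into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_subnet IP CIDR: succeed if IP falls inside CIDR (e.g. 172.17.0.0/16).
in_subnet() {
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "${2%/*}") & $mask )) ]
}

# mytomcat's address on the default bridge versus the mynet subnet.
if in_subnet 172.17.0.2 192.168.0.0/16; then
  echo "172.17.0.2 is inside 192.168.0.0/16"
else
  echo "172.17.0.2 is outside 192.168.0.0/16"
fi
```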

# Command: docker network connect

[root@hadoop101 tomcat]# docker network connect --help

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:

--alias strings Add network-scoped alias for the container

--driver-opt strings driver options for the network

--ip string IPv4 address (e.g., 172.30.100.104)

--ip6 string IPv6 address (e.g., 2001:db8::33)

--link list Add link to another container

--link-local-ip strings Add a link-local address for the container

[root@hadoop101 tomcat]# docker network connect mynet mytomcat

[root@hadoop101 tomcat]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "b3711d274a3b0c26d0aee921ed5b29f5c4a6aaf56776b84ec5063311dff268a7",

"Created": "2021-03-17T03:55:00.233549495-04:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"59b8c40e4e798d3518be484cc689cab83ba7290e5aab8b029a4daa0424fcad72": {

"Name": "tomcat01",

"EndpointID": "cc16a828f18d19237d1c3184cdcaa8472124dc4a5a32470b0b2b2afe6bb108cd",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"a6c9618e2f00b8d7b1b6c3fcd9a6cf30deb54ef96aa3082af2a8bd9ee1bdd528": {

"Name": "mytomcat",

"EndpointID": "fe41b3cbc337225edd2aa873c8a79ce7d0c0ec2bb7cd6a3720b824224808bc06",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

},

"b6bd672de6397e4395ebddecc0cf05ee6ebb4ecdceaff39daaf5942bf876c3f1": {

"Name": "tomcat02",

"EndpointID": "71baca45e58e0bf252af05124b3ff793161a70c1254eafe684a10bcd361a4e43",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

# mytomcat has now joined the mynet network

# Inspecting the bridge network shows that mytomcat is still attached to it as well

[root@hadoop101 tomcat]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "4ef3c81eb3c1b4e62cf1df477fcfe4625fef3c9949cd369d5801eaaa4ebfad38",

"Created": "2021-03-17T01:25:59.97825784-04:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"a6c9618e2f00b8d7b1b6c3fcd9a6cf30deb54ef96aa3082af2a8bd9ee1bdd528": {

"Name": "mytomcat",

"EndpointID": "6aba23134146e130d2119caf966ec2927c6ca967de8a8be3453936827fec4df3",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

# One container, two IP addresses (one per network)

# The ping now succeeds

[root@hadoop101 tomcat]# docker exec -it mytomcat ping tomcat01

PING tomcat01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.074 ms

64 bytes from tomcat01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.097 ms

64 bytes from tomcat01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.059 ms

Conclusion

To reach containers on another network, attach the container to that network with docker network connect

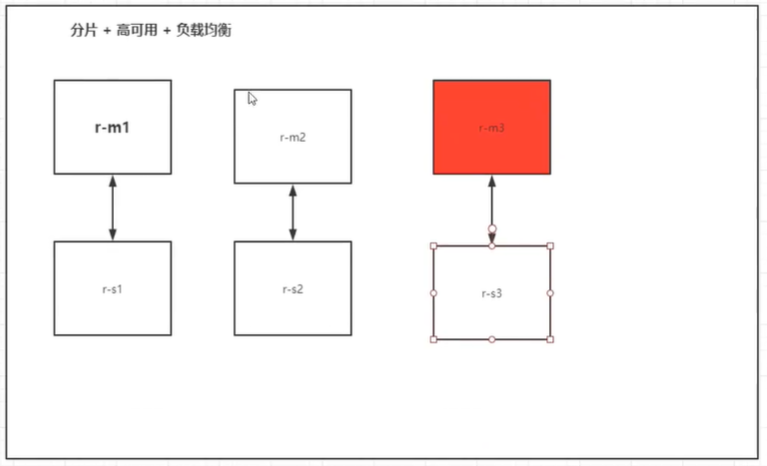

Hands-on: Deploying a Redis Cluster

When a master node goes down, its replica immediately takes over

# 1. Create a network for Redis

[root@hadoop101 tomcat]# docker network create redis --subnet 172.38.0.0/16

6e3e1276d9e452eacdbd08800ab001008232925ab0d29ae0311fe6f302a2afc7

# 2. Create the configuration files for six Redis nodes with a script

for port in $(seq 1 6)

do

mkdir -p /mydata/redis/node-${port}/conf

# Use > rather than >> so that re-running the script does not append duplicate settings

cat << EOF > /mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done
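Before writing files under /mydata, it can help to preview what each node's configuration will look like. The sketch below uses a hypothetical helper, `render_redis_conf`, that prints the same settings as the loop above to stdout instead of to a file.

```shell
# render_redis_conf N: print the redis.conf for node N (1-6) to stdout.
# Hypothetical helper mirroring the settings written by the loop above.
render_redis_conf() {
  cat << EOF
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1$1
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
}

# Preview the only line that differs between nodes.
for n in $(seq 1 6); do
  render_redis_conf "$n" | grep cluster-announce-ip
done
```

The announce IP is the one setting that varies per node, and it has to match the `--ip` value the container is later started with.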

# Start the Redis containers (this could also be scripted)

[root@hadoop101 ~]# docker run -p 6731:6379 -p 16731:16379 --name redis-1 \

-v /mydata/redis/node-1/data:/data \

-v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

[root@hadoop101 ~]# docker run -p 6732:6379 -p 16732:16379 --name redis-2 \

-v /mydata/redis/node-2/data:/data \

-v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

[root@hadoop101 ~]# docker run -p 6733:6379 -p 16733:16379 --name redis-3 \

-v /mydata/redis/node-3/data:/data \

-v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

[root@hadoop101 ~]# docker run -p 6734:6379 -p 16734:16379 --name redis-4 \

-v /mydata/redis/node-4/data:/data \

-v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

[root@hadoop101 ~]# docker run -p 6735:6379 -p 16735:16379 --name redis-5 \

-v /mydata/redis/node-5/data:/data \

-v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

[root@hadoop101 ~]# docker run -p 6736:6379 -p 16736:16379 --name redis-6 \

-v /mydata/redis/node-6/data:/data \

-v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
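The six start commands differ only in the node number, so they can be generated in a loop. The sketch below is a dry run: the hypothetical helper `redis_run_cmd` prints each command instead of executing it, so the output can be reviewed first.

```shell
# redis_run_cmd N: print (not run) the docker run command for node N.
# Host ports 673N/1673N follow the commands above; the container-side ports
# 6379 and 16379 match port and cluster-announce-bus-port in redis.conf.
redis_run_cmd() {
  printf '%s\n' "docker run -p 673$1:6379 -p 1673$1:16379 --name redis-$1 \
-v /mydata/redis/node-$1/data:/data \
-v /mydata/redis/node-$1/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.1$1 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf"
}

# Print one command per node.
for n in 1 2 3 4 5 6; do
  redis_run_cmd "$n"
done
```

Once the printed commands look right, piping the loop's output to `sh` would start all six containers.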

# Create and configure the cluster

[root@hadoop101 ~]# docker exec -it redis-1 /bin/sh

/data # ls

appendonly.aof nodes.conf

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: 7e858cd50e750f2d42814b160c9d78aa4cbb6142 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: d2fc6219a2b0dc9905103b9f687ff31d3183845b 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 7bed3676139b22c35e3176645a5b35fa351304d4 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 0ec64739fcd0224d8f0096caeb00f3154548ed02 172.38.0.14:6379

replicates 7bed3676139b22c35e3176645a5b35fa351304d4

S: 4e74fb4863f225ccdf56454b24f843d45bb0d7cf 172.38.0.15:6379

replicates 7e858cd50e750f2d42814b160c9d78aa4cbb6142

S: a72ab8971eb2f2f7f30266877d401a364385a417 172.38.0.16:6379

replicates d2fc6219a2b0dc9905103b9f687ff31d3183845b

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: 7e858cd50e750f2d42814b160c9d78aa4cbb6142 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 7bed3676139b22c35e3176645a5b35fa351304d4 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: a72ab8971eb2f2f7f30266877d401a364385a417 172.38.0.16:6379

slots: (0 slots) slave

replicates d2fc6219a2b0dc9905103b9f687ff31d3183845b

S: 0ec64739fcd0224d8f0096caeb00f3154548ed02 172.38.0.14:6379

slots: (0 slots) slave

replicates 7bed3676139b22c35e3176645a5b35fa351304d4

M: d2fc6219a2b0dc9905103b9f687ff31d3183845b 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 4e74fb4863f225ccdf56454b24f843d45bb0d7cf 172.38.0.15:6379

slots: (0 slots) slave

replicates 7e858cd50e750f2d42814b160c9d78aa4cbb6142

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# View cluster information

/data # redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:125

cluster_stats_messages_pong_sent:130

cluster_stats_messages_sent:255

cluster_stats_messages_ping_received:125

cluster_stats_messages_pong_received:125

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:255

127.0.0.1:6379> cluster nodes

7bed3676139b22c35e3176645a5b35fa351304d4 172.38.0.13:6379@16379 master - 0 1615974158000 3 connected 10923-16383

a72ab8971eb2f2f7f30266877d401a364385a417 172.38.0.16:6379@16379 slave d2fc6219a2b0dc9905103b9f687ff31d3183845b 0 1615974158719 6 connected

0ec64739fcd0224d8f0096caeb00f3154548ed02 172.38.0.14:6379@16379 slave 7bed3676139b22c35e3176645a5b35fa351304d4 0 1615974160026 4 connected

d2fc6219a2b0dc9905103b9f687ff31d3183845b 172.38.0.12:6379@16379 master - 0 1615974159725 2 connected 5461-10922

4e74fb4863f225ccdf56454b24f843d45bb0d7cf 172.38.0.15:6379@16379 slave 7e858cd50e750f2d42814b160c9d78aa4cbb6142 0 1615974159000 5 connected

7e858cd50e750f2d42814b160c9d78aa4cbb6142 172.38.0.11:6379@16379 myself,master - 0 1615974158000 1 connected 0-5460

# Set a value; the request is redirected to 172.38.0.13, the redis-3 master

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
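The redirect follows from how Redis Cluster places keys: the slot is CRC16 of the key modulo 16384, and each master owns a slot range. The sketch below recomputes the slot for key `a`; `key_slot` and `slot_owner` are hypothetical helpers, hash tags (`{...}`) are not handled, and the ranges are the ones assigned in the cluster creation output above.

```shell
# key_slot KEY: print the cluster hash slot, CRC16 (XMODEM variant) mod 16384.
# Sketch only: hash tags ({...}) are not handled.
key_slot() {
  crc=0
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( $crc ^ ($byte << 8) ))
    i=0
    while [ "$i" -lt 8 ]; do
      if [ $(( $crc & 32768 )) -ne 0 ]; then          # top bit (0x8000) set
        crc=$(( (($crc << 1) ^ 4129) & 65535 ))       # polynomial 0x1021
      else
        crc=$(( ($crc << 1) & 65535 ))
      fi
      i=$(( $i + 1 ))
    done
  done
  echo $(( $crc % 16384 ))
}

# slot_owner SLOT: map a slot to the master that was assigned its range.
slot_owner() {
  if   [ "$1" -le 5460 ];  then echo 172.38.0.11
  elif [ "$1" -le 10922 ]; then echo 172.38.0.12
  else                          echo 172.38.0.13
  fi
}

slot=$(key_slot a)
echo "key a -> slot $slot on $(slot_owner "$slot")"
```

For key `a` this yields slot 15495, which lies in the 10923-16383 range and so agrees with the redirect to 172.38.0.13 shown above.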

# Now stop redis-3

[smile@hadoop101 /]$ sudo docker stop redis-3

redis-3

# Back in the Redis session, the old connection to 172.38.0.13 times out; after reconnecting, get a still returns b

172.38.0.13:6379> get a

Error: Operation timed out

/data # redis-cli -c

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

# cluster nodes shows that 172.38.0.13 has failed and 172.38.0.14 has been promoted to master

172.38.0.14:6379> cluster nodes

0ec64739fcd0224d8f0096caeb00f3154548ed02 172.38.0.14:6379@16379 myself,master - 0 1615974792000 7 connected 10923-16383

7bed3676139b22c35e3176645a5b35fa351304d4 172.38.0.13:6379@16379 master,fail - 1615974571089 1615974568552 3 connected

a72ab8971eb2f2f7f30266877d401a364385a417 172.38.0.16:6379@16379 slave d2fc6219a2b0dc9905103b9f687ff31d3183845b 0 1615974793716 6 connected

7e858cd50e750f2d42814b160c9d78aa4cbb6142 172.38.0.11:6379@16379 master - 0 1615974793514 1 connected 0-5460

d2fc6219a2b0dc9905103b9f687ff31d3183845b 172.38.0.12:6379@16379 master - 0 1615974793000 2 connected 5461-10922

4e74fb4863f225ccdf56454b24f843d45bb0d7cf 172.38.0.15:6379@16379 slave 7e858cd50e750f2d42814b160c9d78aa4cbb6142 0 1615974792708 5 connected
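Reading failover state by eye gets tedious as the cluster grows; the flags column of `cluster nodes` can be filtered instead. This is a minimal sketch with a hypothetical helper, `nodes_with_flag`, run here against two lines copied from the output above rather than against a live cluster.

```shell
# nodes_with_flag FLAG: read `cluster nodes` output on stdin and print the
# address (field 2, without the @bus-port suffix) of every node whose
# comma-separated flags (field 3) include FLAG. Hypothetical helper.
nodes_with_flag() {
  awk -v flag="$1" '
    {
      n = split($3, flags, ",")
      for (i = 1; i <= n; i++)
        if (flags[i] == flag) { sub(/@.*/, "", $2); print $2; break }
    }
  '
}

# Two lines from the cluster nodes output after redis-3 was stopped.
sample='0ec64739fcd0224d8f0096caeb00f3154548ed02 172.38.0.14:6379@16379 myself,master - 0 1615974792000 7 connected 10923-16383
7bed3676139b22c35e3176645a5b35fa351304d4 172.38.0.13:6379@16379 master,fail - 1615974571089 1615974568552 3 connected'

echo "failed nodes:"
printf '%s\n' "$sample" | nodes_with_flag fail
```

Against a running cluster, the same filter could be fed from `redis-cli cluster nodes | nodes_with_flag fail`.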